Handware is important and old ideas work.

Vasik Rajlich (2004/5)

Inside REBEL

Program description

in PDF

Introduction

Although I can't find the exact quote any longer in the CCC archives I remember these prophetic words quite well. The realization changes could be proven by playing 10,000-20,000+ bullet games was part of his rapid progress. And for that one needed of course hardware that was capable of doing that which in those days waan't richly available as it is now.

Secondly that indeed some old ideas rejected earlier as a regression seems to work after all now that testing became more reliable. And in reverse, that in the past programmers made changes which in retrospect weren't improvements after all, but regressions and even worse never were looked back at. All in all it make sense to re-test every change once made now that accurate testing is possible.

In this blog (whiich I hope to update frequently) I will propose a number of ideas, old and new, that worked for me which necessarily doesn't have to work in any other engine, ideas that eventually were small regressions that might work for other engines and ideas that gave such a small improvement (1-2 elo) the LOS remained under 95% which is the line drawn in the sand for me to reject or accept changes.

So here we go.

__________________________________________________________________________________________________

LMR

With LMR we have a number of restrictions not doing LMR because it's too dangerous to reduce. Most known cases, king in check, giving a check, captures etc. I found 2 new ones, one about King Safety, one about Passed Pawns.

King Safety - if the evaluation of the king safety for white is greater than 0.50 and the king safety for white has gone up with 0.25 in comparison with the previous ply then don't LMR. Likewise for black.

Same for passed pawn.

Passed Pawn - if the passed pawn evaluation for white is greater than 0.50 and the passed pawn evaluation for white has gone up with 0.25 in comparison with the previous ply then don't LMR. Likewise for black.

Progress about 5-7 elo, LOS okay.

Note that the 0.50 and 0.25 values were first best guesses and need tuning.

__________________________________________________________________________________________________

A Material Imbalance Table

Recently I released a database of 5 million CCRL and CEGT chess games with the goal to extract ELO from it, after all there are tons and tons of valuable informations in those games especially in games played between high rated engines.

One way to do that is the usage of an material imbalance table as first introduced in Rybka 1.0 beta.

From the 5 million games with a special version of ProTools 2 tables are created -

static int mat_pos [9][9] [3][3] [3][3] [3][3] [2][2]; // wp|bp|wn|bn|wb|bb|wr|br|wq|bq (number of games)

static int mat_perc [9][9] [3][3] [3][3] [3][3] [2][2]; // wp|bp|wn|bn|wb|bb|wr|br|wq|bq (score percentage)

The 2 tables are stored to disk and loaded at ProDeo's program start and then accessed in the evaluation function.

At program start

__________________________________________________________________________________________________

In EVAL

From here there are a whole lot of ideas to tune the evaluation. To name a few:

- The crude way - simply use the percentage (var perc) of the cell, subtract 50 (resulting in a value of -50 / +50) and add it to score.

- The safe way - use only cells that either have a 100 or 0 percentage (var perc) and have at least 10 games played (var pos) and use the number of games as a base for the height of the bonus / penalty.

- Everything in between.

- Instead of an overall evaluation tune only specific parts of the evaluation such as the Bishop Pair, Rook vs 2 minors, Queen vs 3 minors, Rook vs Bishop + pawn, minor vs 3 pawns etc.

__________________________________________________________________________________________________

Notes and download

It's important to realize that in principle cells do not contain reliable information because every cell lacks where the pieces were placed on the board. But say when a cell is based on more than 10 gamesand the percentage (which is always from the white point of view) is >90% or <10% the cell increases in reliability.

Using comp-comp games based on ELO decides the quality of the material table. With a special version of ProTools we created 5 material imbalance tables (MIT) ready to use for experiments following the code above.

The blue statistic in the below table show the cell division by the score percentage, as one can see the 0% and 100% are the most interesting, to see the full range from 0% till 100% click here. As a first try we used the orange settings above using MIT-3100 resulted in a small positive result.

int wp,wn,wb,wr,wq,bp,bn,bb,br,bq;

FILE *fp1,*fp2;

fp1 = fopen("pos.mat","rb");

fp2 = fopen("perc.mat","rb");

for (wp=0; wp<=8; wp++)

for (bp=0; bp<=8; bp++)

for (wn=0; wn<=2; wn++)

for (bn=0; bn<=2; bn++)

for (wb=0; wb<=2; wb++)

for (bb=0; bb<=2; bb++)

for (wr=0; wr<=2; wr++)

for (br=0; br<=2; br++)

for (wq=0; wq<=1; wq++)

for (bq=0; bq<=1; bq++)

{ fread(&mat_pos [wp][bp] [wn][bn] [wb][bb] [wr][br] [wq][bq],4,1,fp1);

fread(&mat_perc [wp][bp] [wn][bn] [wb][bb] [wr][br] [wq][bq],4,1,fp2); }

fclose(fp1);

fclose(fp2); void Material_Imbalance()

{ int wp,wn,wb,wr,wq,bp,bn,bb,br,bq;

int pos,perc;

wp = number_of_white_pawns;

wn = number_of_white_knights; // max=2

wb = number_of_white_bishops; // max=2

wr = number_of_white_rooks; // max=2

wq = number_of_white_queens; // max=1

bp = number_of_black_pawns;

bn = number_of_black_knights; // max=2

bb = number_of_black_bishops; // max=2

br = number_of_black_rooks; // max=2

bq = number_of_black_queens; // max=1

perc=mat_perc [wp][zp] [wn][zn] [wl][zl] [wt][zt] [wd][zd]; // score % for this cell

pos=mat_pos [wp][zp] [wn][zn] [wl][zl] [wt][zt] [wd][zd]; // number of games.Table | Min. ELO | Games used | Cells in use | Perc | 0% | 1% | 2% | 3% | 97% | 98% | 99% | 100% |

MIT-3100 | 3100 | 357.459 | 54.703 | 23% | 9.276 | 93 | 83 | 98 | 161 | 125 | 91 | 13.178 |

MIT-3000 | 3000 | 553.617 | 61.863 | 26% | 10.222 | 94 | 122 | 113 | 316 | 356 | 419 | 14.257 |

MIT-2900 | 2900 | 915.263 | 71.678 | 30% | 12.111 | 137 | 172 | 181 | 271 | 219 | 235 | 16.415 |

MIT-2800 | 2800 | 1.371.273 | 80.429 | 34% | 13.927 | 203 | 220 | 214 | 320 | 328 | 324 | 18.500 |

MIT-ALL | 0 | 5.095.368 | 119.154 | 50% | 22.265 | 805 | 758 | 656 | 918 | 1034 | 1066 | 24.970 |

Download the tables

__________________________________________________________________________________________________

Anti-Shuffle

avoid shuffle pieces

One of the most annoying parts of computer chess is where ProDeo has reached an optimal position having all the pieces on the right squares but doesn't know (lack the knowledge) to make further progress and aimlessly start to shuffle its pieces while each move the score is dropping with a few centi-points until there is no advantage any longer.

I wrote some code to fix this, the function isn't the cure, but it certainly helps. While bullet games gave a small regression a more decent level (40m/60s) gave a nice improvement of 51.5% (+10 elo). Problem is only 1600 games were played and so the status is unclear.

The pseudo code.

void anti_shuffle()

{ int count=0;

int tempo_penalty[] = { 0,0,0,8,16,32,36,40,40,40,40,40,40,40,40,40,40 };

if (end_game) return; // only in the middle game

if (move = WP or BP) return;

if (move = capture) return;

if (move solves an existing threat) return; // cases like h2-h3 Pg4-f6

count++;

// And now we do the same 3 checks for the whole tree for the color that moved

// and count the number of cases they occur and apply a penalty via a table.

score-=tempo_penalty[count];

}__________________________________________________________________________________________________

Evaluation of the Initiative

In chess a sort of golden rule is to create multiple advantages and they together form the base for victory. For instance, if one has pressure on the opponent king plus a good passed pawn plus the bishop pair one usually -- with those 3 advantages -- has a won game. So why not try to teach this principle to the silicon? And it does not work, that is, applying a big bonus gave a big regression but a small bonus might work and will at least made ProDeo's playing style more attractive. The pseudo code for white:

int count=0;

int initiative[] = { 0, 0, 0, 32, 64, 128, 192, 256 };

if (white king is relatively safe)

{ if (king safety for black >= 0.25) count++;

if (king safety for black >= 0.50) count++;

if (white passed pawn eval >= 0.25) count++;

if (white passed pawn eval >= 0.50) count++;

if (mobility for white >= 0.25) count++;

if (mobility for white >= 0.50) count++;

if (white has bishop pair and black does not) count++;

score+=initiative[count];Do the same for black.

__________________________________________________________________________________________________

Piece Square Tables

Before the search starts ProDeo modifies its Piece-Square-Tables with the information from the HISTORY table from the previous search. While doing so will colide with the scores in the Hash-Table the idea still gave good ELO. approx. 10-15 if I remember correctly. Take a look first at the inner part of the HISTORY table before looking at the pseudo code of the algorithm.

void update_pst() // update PST using the history values of the previous search

{ int sq; // square

int pt; // piece type

int hist,pst;

int pst_table[]= {-20,-18,-16,-14,-12,-10,-8,-6,-4,-2,0,2,4,6,8,10,12,14,16,18,20,20 };

if (white material <= ROOK) return; // not in late endgame

if (black material <= ROOK) return;

for (pt=WP; pt<=BK; pt++)

for (sq=A1; sq<=H8; sq++)

{ hist=H[pt][sq]; // get history value (-2000 | +2000)

pst=PST[(pt*128)+sq]; // get pst value

hist=hist/200; hist+=10; // hist as pointer

pst+=pst_table[hist]; // update PST cell

PST[(pt*128)+sq]=pst; // store back

}

}It's probably one of those ideas that will not work with many other engines, the score collisions with the Hash Table are a big issue, trying the idea in the regular search itself by calling the routine after each iteration failed miserabely due to the search instability that comes with it.

__________________________________________________________________________________________________

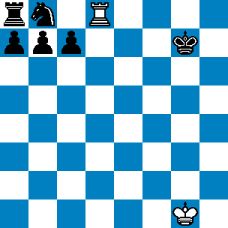

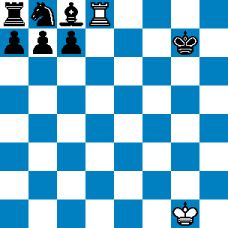

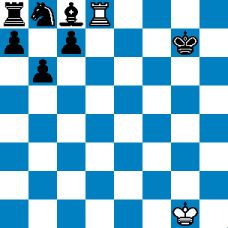

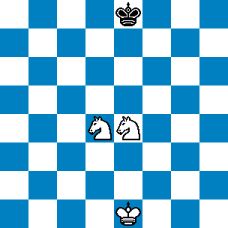

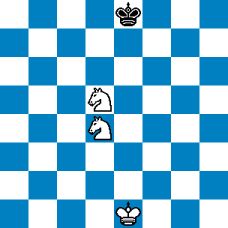

Rook pins 8th row

A bonus of 0.50 is given for white

A bonus of 0.25 is given for white

A bonus of 0.12 is given for white

Situations like these don't often occur in a game but when they do it often are game changers, especially the one in the left diagram, black practically is paralized. I don't have the hardware to test it, let alone to tune the 0.50 | 0.25 | 0.12 values but maybe it's just something one must have.

_______________________________________________________________________________________________

Mobility

Mobility in the old REBEL is explained here where a counter [ for white and black ] serves as a pointer to the mobility table producing the final mobility score. Years later in ProDeo splitting the mobility counter into white and black gave a nice improvement. It's now -

score+=table[white_count];

score-=table[black_count];

It's much more aggressive this way and I had to lower the values of the mobility table before I got the improvement I was looking for.

_______________________________________________________________________________________________

Reductions

Rebel vs ProDeo

The old REBEL and its selective search had 5 different types of static reductions (or as some like to label them as static nullmove) but with the change to full nullmove a couple of years ago only one survived [2d] but with considerable changes and a new one was introduced.

About the new one, it's a complicated one but it gave more than good ELO (20-30) but it needs specific information, namely the 2 highest hanging pieces of the position, usually called SEE.

The idea behind the reduction is simple, when 2 pieces are hanging then in 90+% of such cases one of those will be lost, likely captured in the next ply also. So why not reduce such instances if the evaluation score is also not so good? And so we calculate a penalty for the 2 highests hanging pieces, subtract it from the evaluation score and if the evaluation score is below ALPHA we reduce.

Needless to say the usual exceptions apply, we don't reduce checks, when king_in_check, winning captures (SEE>0) etc.

_______________________________________________________________________________________________

Some small stuff

- Right from the chess books, in a Bishop vs Knight ending, when both sides have pawns on both flanks and the center is open (no pawns) the color with the bishop has a clear advantage. The books don't say how to express that clear advantage into a number and so I gave it the value of 0.25 as an educated guess.

- Also from the chess books, a knight outpost should be covered with at least one of its own pawns, preferable two. And oddly it never gave an improvement, just the knight outpost only gave ELO. Curious about this I found a candidate in TOGA, removed the pawn coverage code and surprise, it scored better.

- Rook imbalance, situations like WRe1 | WPe4 | BPe5 are bad ideas, that white rook is doing nothing, a small penalty is given.

- Rooks, attack pawns from behind, especially in endings.

- Blocking a weak with a knight is usually a good idea, a small bonus is given.

_______________________________________________________________________________________________

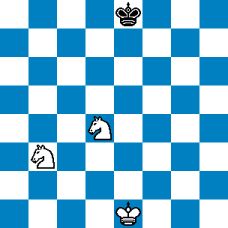

Cooperative Knights

Usually cooperating knights -- such as in diagram 1 & 2 -- are good while the knight pair in diagram 3 looks counter productive. Annoyingly enough applying a bonus for situations as in diagram 1 & 2 never produced ELO, same for applying a penalty for diagram 3 situations. Maybe you have better luck.

_______________________________________________________________________________________________

PV extension

or Hash Table move extension

Last time I checked the PV (principal variation) extension was good for some 20-30 ELO but based on bullet games, it's not unlikely there will be a diminishing return on longer time controls. The logic behind the extension is that it's maybe not a bad idea to extend a variation with 4 moves from the hash table.

Example, imagine we are searching the start position after the 7th iteration the main variation from the hash table is:

1.e4 e5 2.Nf3 Nc6 3.Bb5 a6 4. Ba4

Then on iteration 8 we count 4 moves from the hash table and extend one ply, two plies if the counter is 8, etc. To avoid that only the main variations are extended we count pairs of consecutive hash table moves. The pseudo code.

if (current_move==TT_move && previous_move==TT_move && move not already extended)

{ count++;

if (count==4) { extend(); count=0; }

}____________________________________________________________________________________________

(Early) Opening Tuning

After the stunning outcome of the first match in the shorties festival (work to do for Komodo team!) I decided to do some experiments to improve the early opening. And it's relatively quite simple provided that you have a PGN parser of your own.

From highly qualified games a (sort of) hash table is created for the first 20-30 moves and then in EVAL (root-moves only) a bonus (valid for the tree it creates) is given if the move is available in that HT.

Self-play (PGN up to 5 moves) gave 10-15 ELO, self-play (PGN up to 10 moves) gave 5-10 ELO.

Another idea would be to apply the technique throughout the whole tree but I doubt the sense of that.

_____________________________________________________________________________________________

Creating meaningful PST's from PGN

A new tool is available that creates the 12 Piece Square Tables (PST) for the MiddleGame from a PGN database. The tables can be used for 1) evaluation, 2) move ordering, 3) pruning and reduction decisions.

- Example-1 : PST's made for the Middle Game from a 82.000 human game collection between players with a minimum elo rating of 2600.

- Example-2 : Same but now for the End Game.

Output is stored in PST.TXT including ready to use C-code.

Go to the download page, install and start SOMU 1.7a, press [NUM-KEYBOARD 1] to create the midgame PST's or [NUM-KEYBOARD 2] to create the endgame PST's using the ccrl-404-3300.pgn example file.